Using AI to create a 'conversational archive' at the National Sound and Film Archive (NSFA)

Keir Winesmith - Chief Digital Officer at the NSFA - talks through the tech infrastructure they are building and sharing with audiences and the sector at large

Today most of our digital interactions are mediated through for-profit algorithms: Google, Meta (Facebook), TikTok.

These organisations and the ways they surface content are very new, compared to the age of our cultural institutions. Although they seem very influential, in 20 years time – within the duration of our careers – Google or Meta won’t look like they do now and might not exist at all. Keir points out to me that in a future where the internet is largely AI slop, search and advertising profits are very unstable.

What I found really visionary about this article was the idea that by creating our own, shareable digital infrastructure, we – as a sector – can aid discovery in a way that sits outside of these large for-profit companies.

Over to Keir, Chief Digital Officer, who will explain how they’re doing just that with their vast archive at the National Film and Sound Archive in Australia…

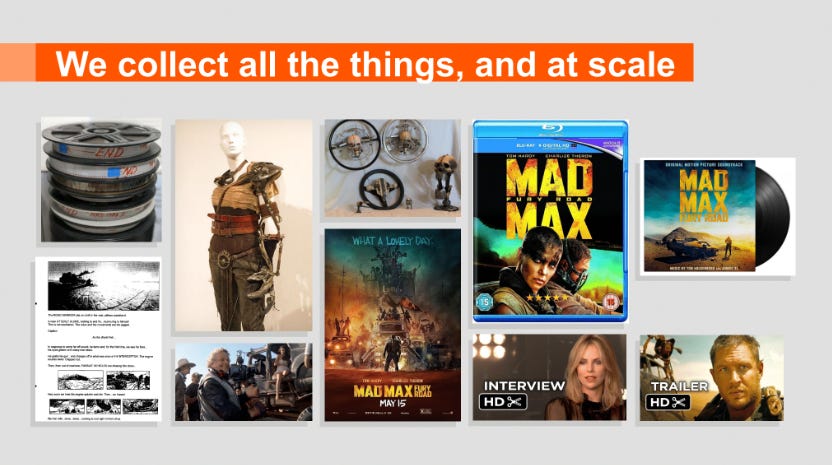

The National Film & Sound Archive of Australia (NFSA) is a little over 40 years old (in its current form) and is located in Canberra, Australia’s capital. However, the need for such an institution was identified 90 years ago and today’s NFSA is the continuation of collecting, conservation, and preservation activities since that time. The collection itself holds materials that date back to the founding moments of film and recorded sound, the beginning of broadcast television and commercial radio in Australia, through to video games, streaming media and social video being produced today.

As the industries we collect from and support have changed, so has Australia’s audiovisual archive. The media industry evolved to become more and more digital and now, in 2025, we collect more born-digital material than media we receive in any other format. Our collection is unknowably large. There are 100,000s of works made up of millions of components that could be used to tell an infinite number of stories.

Machine Learning at the NFSA

In late 2020, the NFSA began an internal pilot of a commercially available software to automate the transcription and on-screen optical character recognition of thousands and thousands of hours of film, tv, radio and oral history from the collection. The pilot was designed to test our working assumption that mass transcription and image recognition would unearth otherwise undocumented stories within the collection. For the pilot, we only included material that was not culturally restricted or otherwise sensitive. We embarked on this journey well before the explosion of interest in machine learning and AI triggered by ChatGPT.

By 2022, it was clear that the pilot was a success. This new way to search the collection became an important part of the organisation’s work. It was not without complexity however, as staff could now more easily encounter challenging or confronting material held within the collection. It was also clear that transcribing and analysing the entire collection using a fee-per-minute commercial model would be exceedingly expensive.

In early 2023 we kicked off a series of internal machine learning and AI prototyping sprints to explore the idea of a Conversational Archive. Which meant, attempting to create interfaces to discover new works in the collection, or find elements within a work (such a specific sentence, person, or idea), through a conversational interface rather than keyword search. While keyword search preferences those who know how the collection is catalogued and what all the expert terms mean, we sought to make an interface that was more intuitive, playful and human; more ‘conversational’.

Over 4 months, NFSA digital and collection staff worked on a small set of projects to help us better understand what was possible with our skills, tools and collection. Some of the pilot projects were successful, others were not.

Two pilot projects clearly showed the greatest promise: internally developed and managed mass transcription; and conversational search tools using a managed graph database of human and machine-generated data, which we now call Graph Assisted Find or Graph AF. There’s more about our work here in this recorded talk:

Before embarking on scaling these projects to an enterprise level within the NFSA we developed from the ground up a set of principles for the creation and use of machine learning and AI, guided by three key strategies: maintain trust, build effectively and transparently, and create public value. More than 30 people across different branches of organisation contributed in some way to the principles, which were published last year.

From pilot to production

Our first focus was developing a mass transcription service, something that could transcribe at scale, on our own machines, at no fees, and that could be fine-tuned on Australian accents and Australian speech such as Australian slang idioms and placenames. American transcription models trained on American speech, usually YouTube content, perform particularly badly, as do names of towns that are derived from First Nations languages and non-American slang. In this context, Australian-accented English is a low-resource language.

If a Bluey episode is 5 minutes, a Neighbours episode is 24 minutes, a radio show is 60 minutes, and an oral history is a few hours, everything born digital or digitised within the NFSA’s collections adds up to 100,000s of hours of content, representing millions and millions of people, places, moments and ideas. The service we developed has already listened to well over 10 years of content, transcribing speech and unearthing gems. This work is grounded in our principles, informed by the Australian Government’s AI frameworks, and legally and culturally vetted to ensure it is appropriate for our organisation.

We shared our progress on mass transcription at the Fantastic Futures conference that we hosted in October 2024. Fantastic Futures is the annual gathering for the international AI4LAM (AI for Libraries, Archives and Museums) community, and draws leading technologists, academics and cultural workers together to engage, share ideas and showcase their work. Videos of all sessions, including a presentation by NFSA’s Grant Heinrich on mass transcription, have recently been published on our site.

What is next?

The next steps for us, now that we have transcribed the vast majority of the NFSA’s digital collection, is to connect many different streams of work together. We are integrating mass transcription and entity extraction with a fundamentally important data modelling project that is also underway, and an improved search and discovery infrastructure, to create something exciting and new.

Our task is to create a conversational archive while maintaining the trust our collection donors, contributors and users have in us, and to help show the value of the collection to industry, educational and general public audiences. To do this, we must enable our people to develop on our own tools and services that unlock the millions and millions of stories in our care. So that the NFSA can connect Australian’s with their audiovisual heritage and help them understand the many ways that the past contains the present and the future.

Interested in a free content strategy workshop? We’re working on some new workshops and would like to test them out. They’ll be delivered in London in the next couple of months but there are likely to be remote versions at some point too.